From Natural Language to Hardware: Building an AI-Powered Arduino Development Agent

Imagine describing what you want your Arduino to do in plain English, and having it automatically generate, compile, and flash the code to your hardware. That weekend project idea just became reality.

The idea might already exist, but for me it was all about testing how far I could stretch my imagination and seeing it come alive in hardware and code.

This post walks through the Arduino LLM Agent, a backend server that combines a local LLM runtime (Ollama, or optionally vLLM) with the Arduino CLI to automate the hardware development workflow, from prompt to flashed board. We'll explore its core features, the underlying technologies, and its potential to change how we interact with embedded systems.

GitHub Repo: https://github.com/bkrajendra/arduino-llm-agent

The Spark of Innovation

As developers, we've all been there - staring at Arduino documentation, copying boilerplate code, and going through the repetitive cycle of write-compile-upload-debug. What if we could just tell our development environment what we want to build and have it handle the rest?

That's exactly what inspired me to create the Arduino LLM Agent - an intelligent backend server that bridges the gap between natural language descriptions and working hardware code.

The Vision: Bridging AI and Hardware

The traditional process of programming microcontrollers often involves writing intricate code, manually compiling it, and then using specific tools to upload it to the board. This can be a time-consuming and error-prone process, especially for complex projects or for those new to embedded development.

The Arduino LLM Agent aims to streamline this process by introducing an AI-driven approach. The core idea is to enable developers (or even non-developers) to use natural language prompts to describe their desired hardware functionality. The LLM then interprets these prompts, generates the appropriate Arduino code, and the system handles the rest - compilation and uploading - automatically.
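As a rough illustration of that flow, here is a minimal Python sketch of the prompt-to-board pipeline. It is not the agent's actual implementation: `extract_code` and `run_pipeline` are hypothetical names, and the three stages are passed in as plain callables so the skeleton stays independent of any particular LLM runtime or toolchain. The extraction step assumes the model may wrap its answer in a markdown code fence, which chat models often do.

```python
import re
from typing import Callable

# A markdown code fence, built from single backticks so this example
# can itself sit inside a fenced block.
FENCE = "`" * 3


def extract_code(reply: str) -> str:
    """Return the first fenced code block in the reply, or the whole reply if none is found."""
    pattern = re.compile(FENCE + r"[\w+-]*\n(.*?)" + FENCE, re.DOTALL)
    match = pattern.search(reply)
    return (match.group(1) if match else reply).strip()


def run_pipeline(
    prompt: str,
    generate: Callable[[str], str],        # prompt -> raw model reply
    compile_sketch: Callable[[str], None],  # source code -> raises on build failure
    upload_sketch: Callable[[str], None],   # source code -> raises on upload failure
) -> str:
    """Run generate -> compile -> upload in order and return the sketch source."""
    code = extract_code(generate(prompt))
    compile_sketch(code)
    upload_sketch(code)
    return code
```

Because each stage is just a function, the same skeleton can be exercised with stubs long before any hardware is plugged in.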

What Makes This Different?

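This isn't just another code generator. It's a complete end-to-end automation pipeline: a prompt goes in, and the generated sketch is compiled and flashed to the board without manual steps in between.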

Key Technologies at Play

Ollama/vLLM: Local LLMs for Private Code Generation

At the heart of the Arduino LLM Agent is Ollama (or, alternatively, the vLLM inference engine), a tool for running large language models locally on your machine. Running the model locally matters for several reasons:

- Privacy and Security: By running LLMs locally, your code generation requests and sensitive project details remain on your machine.

- Offline Capability: Develop and generate code even without an internet connection.

- Customization: Easily swap out different LLM models or fine-tune them for specific code generation tasks.

Ollama simplifies the process of getting LLMs up and running, making it easy for developers to integrate AI capabilities into their local workflows. In the Arduino LLM Agent, Ollama translates natural language prompts into functional Arduino, ESP8266, or ESP32 code using a compact model from Meta's Llama family: llama3.2:3b.
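To make the Ollama step concrete, here is a minimal Python sketch of a chat request against Ollama's local REST API (`/api/chat` on the default port 11434). The system prompt wording and the function names are assumptions for illustration; the agent's real prompt and code may differ.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local address
MODEL = "llama3.2:3b"

# Hypothetical system instruction; the agent's actual wording is not shown in this post.
SYSTEM_PROMPT = (
    "You are an embedded developer. "
    "Reply with one complete Arduino sketch and nothing else."
)


def build_chat_payload(prompt: str, model: str = MODEL) -> dict:
    """Build the JSON body for a non-streaming Ollama chat request."""
    return {
        "model": model,
        "stream": False,  # ask for a single JSON response instead of a token stream
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": prompt},
        ],
    }


def generate_sketch(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a locally running Ollama server and return the model's reply text."""
    body = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["message"]["content"]
```

Calling `generate_sketch("Write Arduino code to blink an LED on pin 2 using ESP32.")` requires Ollama to be running with the model already pulled.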

Arduino CLI: The Command-Line Powerhouse

To handle the compilation and uploading of the generated code, the Arduino LLM Agent uses the Arduino Command Line Interface (CLI). The Arduino CLI exposes the Arduino IDE's core tooling (board and library management, compilation, and upload) from the command line. This enables:

- Automation: Programmatic control over the compilation and uploading process, essential for an automated workflow.

- Flexibility: Support for various Arduino boards, ESP8266, and ESP32, allowing the agent to cater to a wide range of hardware projects.

- Integration: Seamless integration with other tools and scripts, making it a perfect fit for a backend server application.
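The compile and upload steps can be sketched in Python as thin wrappers around `arduino-cli compile` and `arduino-cli upload`. This is an illustration under assumptions, not the agent's code: the helper names are hypothetical, and the board identifier (FQBN) and serial port are values you would supply for your own setup, for example `esp32:esp32:esp32` for a generic ESP32 dev module. Note that the Arduino CLI expects the main `.ino` file to carry the same name as its sketch folder.

```python
import subprocess
from pathlib import Path
from typing import List


def write_sketch(code: str, workdir: Path, name: str = "generated") -> Path:
    """Write the code to <workdir>/<name>/<name>.ino and return the sketch folder."""
    sketch_dir = Path(workdir) / name
    sketch_dir.mkdir(parents=True, exist_ok=True)
    (sketch_dir / f"{name}.ino").write_text(code, encoding="utf-8")
    return sketch_dir


def compile_command(sketch_dir: Path, fqbn: str) -> List[str]:
    """Build the argument list for compiling a sketch for the given board."""
    return ["arduino-cli", "compile", "--fqbn", fqbn, str(sketch_dir)]


def upload_command(sketch_dir: Path, fqbn: str, port: str) -> List[str]:
    """Build the argument list for uploading a compiled sketch over the given port."""
    return ["arduino-cli", "upload", "-p", port, "--fqbn", fqbn, str(sketch_dir)]


def compile_and_upload(sketch_dir: Path, fqbn: str, port: str) -> None:
    """Run compile, then upload; raises CalledProcessError if either step fails."""
    for command in (compile_command(sketch_dir, fqbn), upload_command(sketch_dir, fqbn, port)):
        subprocess.run(command, check=True)
```

Passing the arguments as a list rather than a shell string avoids quoting problems when paths or port names contain spaces.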

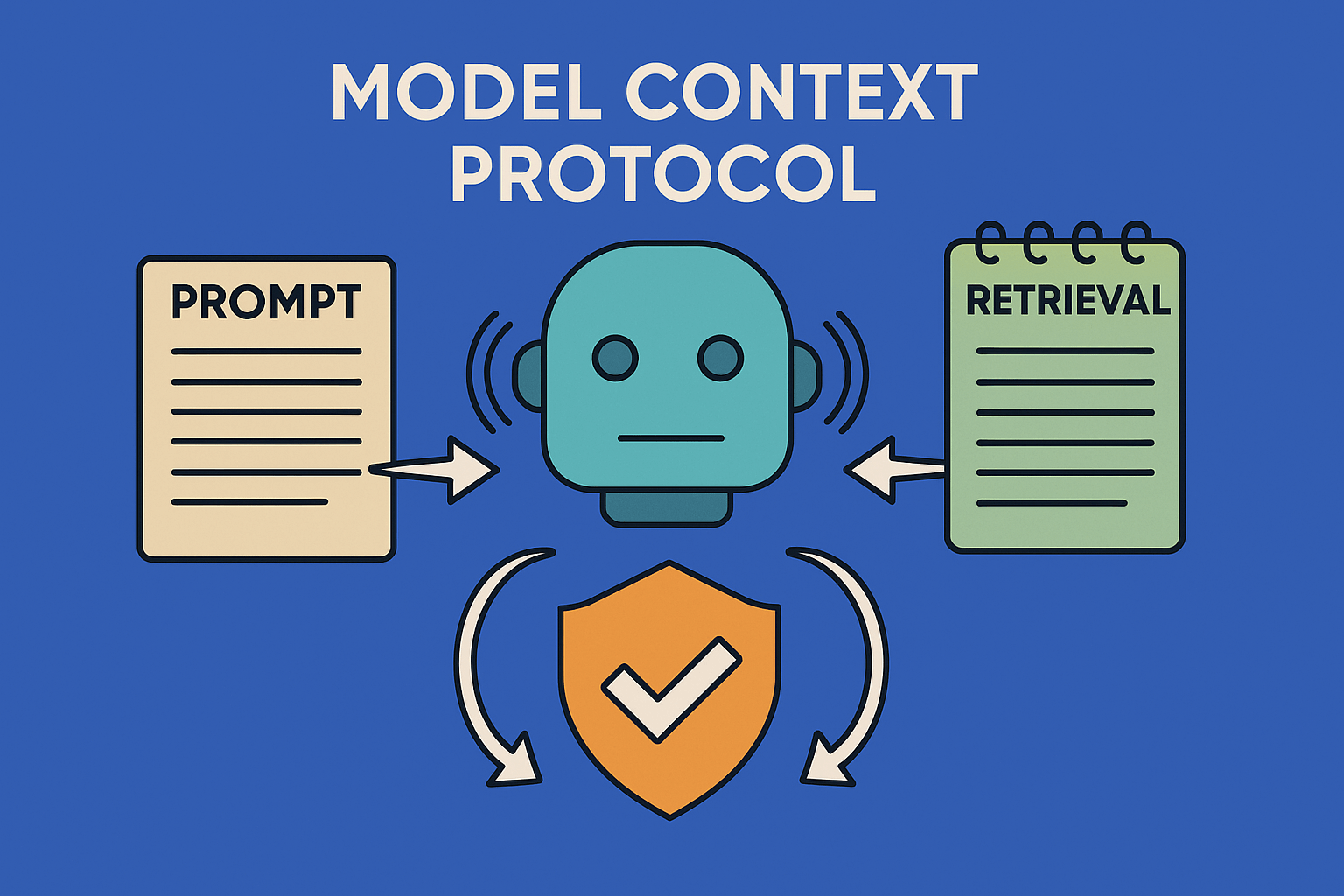

Model Context Protocol (MCP): A Glimpse into the Future

The Arduino LLM Agent also partially implements the Model Context Protocol (MCP). MCP is an emerging open standard that aims to standardize how applications provide context to LLMs. Think of it as a universal adapter for AI applications, allowing them to seamlessly interact with external data sources and tools.

While the Arduino LLM Agent currently uses structured system and user roles and passes prompt context in a clear format, future improvements aim for full MCP compliance. This will enable:

- Context Registry: Reusable system instructions for various tasks.

- Standardized Payloads: Alignment with OpenAI's Chat API format for broader compatibility.

- Tool/Plugin Calls: Structured operations for interacting with external tools.

- Comprehensive Logging: Storing chain-of-thought and compile/upload logs for better debugging and analysis.

Full MCP compliance will evolve this project into a reusable, standards-friendly backend for embedded LLM workflows, paving the way for even more sophisticated AI-hardware interactions.
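To give a feel for the "Context Registry" and "Standardized Payloads" items, here is a small Python sketch. It is not taken from the MCP specification or from the project: `ContextRegistry` and its methods are hypothetical, and the only convention it borrows is the role/content message list used by OpenAI-style chat APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContextRegistry:
    """Maps a task name to reusable system instructions (illustrative only)."""

    contexts: Dict[str, str] = field(default_factory=dict)

    def register(self, task: str, instructions: str) -> None:
        """Store or overwrite the system instructions for a task."""
        self.contexts[task] = instructions

    def build_messages(self, task: str, prompt: str) -> List[Dict[str, str]]:
        """Return a chat-format message list; raises KeyError for an unknown task."""
        return [
            {"role": "system", "content": self.contexts[task]},
            {"role": "user", "content": prompt},
        ]


registry = ContextRegistry()
registry.register("arduino-codegen", "Reply with one complete Arduino sketch and nothing else.")
messages = registry.build_messages("arduino-codegen", "Blink an LED on pin 2 using ESP32.")
```

Keeping the instructions in one registry means a new task (say, explaining a compile error) only needs a new entry, not a new request-building path.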

The latest features and work in progress can be found on the branch bkrajendra-patch-1.

How it Works: A Simplified Flow

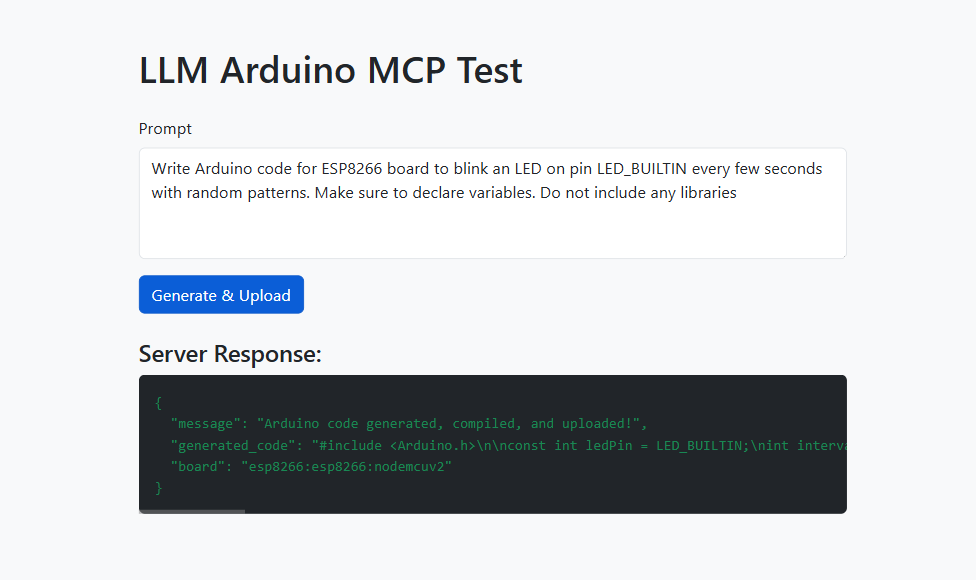

The process of using the Arduino LLM Agent is remarkably straightforward:

- Step 1 - Setup: Install Ollama (or vLLM) and Arduino CLI on your machine.

- Step 2 - Run the Server: Start the Arduino LLM Agent backend server.

- Step 3 - Send a Prompt: Send a natural language prompt (e.g., "Write Arduino code to blink an LED on pin 2 using ESP32.") to the server via a POST request.

    curl -X POST http://localhost:3000/generate-arduino \
      -H "Content-Type: application/json" \
      -d '{"prompt": "Write Arduino code to blink LED on pin 2 using ESP32."}'

- Step 4 - AI Magic: The LLM (powered by Ollama) generates the Arduino code based on your prompt.

- Step 5 - Automated Hardware Interaction: The Arduino CLI automatically compiles the generated code and uploads it to your specified board.
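For scripting the same request from code instead of curl, a minimal Python client might look like the sketch below. The URL and JSON body match the curl example in Step 3; the function names are hypothetical, and because the response format is not described here, the client simply returns the raw response text.

```python
import json
import urllib.request

AGENT_URL = "http://localhost:3000/generate-arduino"  # endpoint from the curl example


def build_request(prompt: str, url: str = AGENT_URL) -> urllib.request.Request:
    """Build the POST request carrying the prompt as a JSON body."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_prompt(prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the running agent and return the raw response body."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as response:
        return response.read().decode("utf-8")
```

The generous timeout is a guess: generation, compilation, and upload all happen behind this one call, so a response can take a while.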

Potential and Future Roadmap

The Arduino LLM Agent is a testament to the power of combining LLMs with embedded automation. Its potential applications are vast, from rapid prototyping and educational tools to enabling non-programmers to bring their hardware ideas to life.

The project's roadmap includes exciting future enhancements:

- Web UI Enhancements: A user-friendly web interface with code syntax highlighting, real-time compilation logs, and upload status.

- Board Management: Dynamic board selection and auto-detection of connected boards.

- User Prompts & Templates: Ready-made templates for common tasks and prompt history.

- Docker & Deployment: Simplified deployment with Docker for local networks or edge devices.

- Extensibility: Support for other microcontroller families (RP2040, STM32, etc.) and a modular plugin system.

What's Next?

This is just the beginning. The vision is to create a configurable Model Context Protocol Server that can be adapted for various hardware development workflows. Whether you're building IoT sensors, robotics projects, or smart home devices, the goal is to make hardware development as intuitive as having a conversation.

When choosing between Ollama and vLLM, consider Ollama for its easy installation and integration, which make it ideal for quick local setups. In contrast, vLLM offers higher throughput and better scalability, making it the better option for larger deployments that need to serve many inference requests.
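One way to keep that choice cheap is to talk to both through their OpenAI-compatible chat endpoints, so switching is a configuration change. The Python sketch below assumes default local ports (11434 for Ollama, 8000 for vLLM's OpenAI-compatible server); the `Backend` class is hypothetical, and the vLLM model id is an assumption about which checkpoint you choose to serve.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Backend:
    """Connection details for one local inference server (illustrative only)."""

    name: str
    base_url: str
    model: str

    @property
    def chat_url(self) -> str:
        """OpenAI-compatible chat completions endpoint for this backend."""
        return f"{self.base_url.rstrip('/')}/v1/chat/completions"


BACKENDS: Dict[str, Backend] = {
    "ollama": Backend("ollama", "http://localhost:11434", "llama3.2:3b"),
    # Assumed model id; use whatever model your vLLM server was started with.
    "vllm": Backend("vllm", "http://localhost:8000", "meta-llama/Llama-3.2-3B-Instruct"),
}


def chat_payload(backend: Backend, prompt: str) -> dict:
    """Build a chat completions body that either backend can accept."""
    return {
        "model": backend.model,
        "messages": [{"role": "user", "content": prompt}],
    }
```

With this in place, the rest of the pipeline only needs the backend name, for example `BACKENDS["ollama"]`, to know where to send a prompt.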

Share your feedback

This project is a small step toward a future where the barrier between imagination and implementation continues to shrink. Feel free to share your feedback and feature requests, or open an issue on GitHub if you run into a problem.

Conclusion

The Arduino LLM Agent represents a significant step forward in making hardware programming more accessible and efficient. By harnessing the capabilities of local LLMs and robust command-line tools, it opens up new possibilities for innovation in the IoT and embedded systems space. This project is not just about generating code; it's about democratizing hardware development and empowering a wider audience to create and experiment with physical computing. If you're passionate about AI, hardware, or both, this project is definitely worth exploring and contributing to!

GitHub: https://github.com/bkrajendra/arduino-llm-agent

Try it out and let me know what you build!

What would you create if generating hardware code was as easy as describing what you want? Drop your ideas in the comments – I'd love to see what this community can imagine.